
Posts posted by Beq Janus

-

-

2 hours ago, OptimoMaximo said:

I guess that passing any number of triangles to the gpu would work on its backface feature. This means that whatever gets culled is not being sent, and the shader on the gpu renders backfaces of those that aren't culled. If the system is not organized like this, that's something that needs to be implemented, because it can be done and it's nothing new. I don't see the problem with keeping the culling mechanism and sending the result to the gpu to perform its operations. Unless, as usual, LL code has subpar limitation in this regard, which I can't be aware of.

They cannot be culled efficiently. Backface culling is simple: is the normal pointing away from the camera? Yes? Great, nothing to do here. In some cases that test may be done on the CPU, discarding the face at the earliest possible opportunity; in others it is done on the GPU. With double-sided faces you have no such test. With rigged mesh we cannot rely on depth sorting to come to our aid, and with alpha blend we would not even have that anyway. Overall it will result in more overdraw, more time wasted, and more unwanted load on the GPU.
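To make the difference concrete, here is a minimal Python sketch of the facing test described above. It is an illustration only, not viewer code: the function names are made up, and it uses one normal per triangle with a single view direction, whereas a real GPU works from the winding order of each projected triangle.

```python
def is_back_facing(normal, view_dir):
    """True when the triangle's normal points away from the camera.

    view_dir is the direction from the camera towards the triangle.
    """
    dot = sum(n * v for n, v in zip(normal, view_dir))
    return dot >= 0.0


def triangles_to_draw(normals, view_dir, double_sided):
    """Return the indices of the triangles that survive backface culling."""
    if double_sided:
        # No facing test is possible: every triangle has to be drawn.
        return list(range(len(normals)))
    return [i for i, n in enumerate(normals) if not is_back_facing(n, view_dir)]
```

With a camera looking down the negative z axis, a triangle whose normal is `(0, 0, 1)` is kept and one whose normal is `(0, 0, -1)` is dropped. Flag the material as double-sided and both are drawn, whether or not the second one can ever be seen.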

It is entirely possible that there is something more cunning planned that will alleviate this; my fingers are crossed in anticipation.

As I have implied before, I don't disagree that there are cases where this feature has benefits, but as always we have chosen to add new footguns to the creators' arsenal and loaded them, without putting in place any gunlaws or guidelines on how they should be properly used. The end state will be an overall increase in time wasted due to poorly defined assets and a loss of scene performance that goes hand in hand with it, all for a rather niche feature.

-

Just now, Ardy Lay said:

I have been reading this thread and am left wondering how long is the time between the window closing and the last viewer related file being closed? I tried several times to "break" mine but did not accomplish this.

It varies by avatar. If you enable the "Inventory" debug logging (through logcontrol.xml) you will see the start and end of the writes. You need to zap it in between those, and keep in mind that there's a good chance you won't notice. Take a look at the number of items written to the cache on a good exit. On a failed exit it will be smaller; how much smaller depends on how badly it got interrupted, and whether you "care" about the "missing" items is entirely chance.

The original bug manifested if the shutdown (or the restart) occurred while the <your avatar UUID>.inv.gz was being constructed. This takes a few forms, as we write to a plain text version and then pass it off to be zipped. When the viewer next starts it tries to carry on as best it can and can end up with a truncated inventory cache. Times will vary with the size of your inventory and the speed of your disk, CPU, etc.

-


-

Coming back to this thread after what feels like a lifetime.

The forthcoming Firestorm will no longer be prone to this issue. The basic fix was simple enough but it turned out to be a merry dance that consumed most of the Christmas period.

The basic issue was as I mentioned in my 1st December post. However that fix itself uncovered a veritable nest of vipers that resulted in the final fix being somewhat different (in some ways simpler).

To recap, the issue was that the new "fast shutdown" was in fact snake oil. The speedy closing of the main window was, I think, not really an intended result, but was perhaps seen as a nice side-effect of moving many of the ancillary functions of the viewer into separate threads of execution (allowing the viewer main thread to spend more time drawing stuff). However, intended or not, the vanishing of the window just hid the fact that the viewer was still busy scurrying around in the background writing the caches out to disk and cleaning up. In Windows, the "premature shutdown" defence only applies to processes that have a UI; thus, having killed the UI, the operating system considers it fair game to kill the viewer, and this was resulting in corrupted inventory files. Furthermore, the lack of a UI also signalled to us, human(-ish) operators, that we were free to start another instance, and that could also trigger issues.

The original "fix" resolved the inventory issue, but led to a number of people seeing the viewer hang during the logout process. Not being able to reproduce this problem, I relied on the valuable and patient assistance of @Rick Daylight and a couple of others to help test various iterations of fixes and debug builds as I tried to locate the hang.

This is now complete, the fix overall is underwhelming and simple compared to the journey taken to find it! But the result is that we should now find that inventory caching on shutdown is far more reliable.

There is a downside, the so-called "fast shutdown" myth is no more. The viewer will now pause as it always used to while it writes out the various caches. There may be scope to speed this up in future, but for now you will see the extended pause at shutdown, but remember that this is telling you that the viewer is still busy.

Addendum - for those technically minded readers

The hangs I had to fight were a result of the shutdown order of the many event queues used, and the fact that they were shared between threads that had potentially different life spans. In particular, the windows thread would continue to read and produce events (mouse movements, clicks, etc.) that get forwarded to other queues to be processed by other parts of the viewer (including the main thread). When those threads stop reading, the queues fill up; the windows thread then blocks waiting for space to be made, space that will never come. Meanwhile the main thread is waiting for the windows thread to finish so that it can exit, resulting in a classic deadlock.
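For illustration, the blocking pattern described above can be reproduced in a few lines of Python. This is a sketch under assumptions, not the Firestorm fix: `window_thread`, `run_shutdown_demo`, the queue size and the timeouts are all invented for the example. The producer stands in for the windows thread, and nothing ever reads the queue, which mimics a consumer that has already shut down.

```python
import queue
import threading
import time


def window_thread(events, stop):
    """Stand-in for the windows thread: keeps producing input events."""
    while not stop.is_set():
        try:
            # A plain events.put(...) would block forever once the queue is
            # full and nobody is reading it. The timeout lets the loop come
            # back round and notice the stop request.
            events.put("mouse-move", timeout=0.01)
        except queue.Full:
            pass


def run_shutdown_demo():
    events = queue.Queue(maxsize=4)      # small bounded queue, fills at once
    stop = threading.Event()
    producer = threading.Thread(target=window_thread, args=(events, stop))
    producer.start()

    time.sleep(0.05)                     # the consumer has stopped reading
    stop.set()                           # ask the producer to finish
    producer.join(timeout=1.0)           # main thread waits for it to exit
    return not producer.is_alive(), events.qsize()
```

Swap the timed `put` for an untimed one and the `join` never returns: the producer is stuck waiting for queue space and the main thread is stuck waiting for the producer, which is the deadlock in miniature.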

-


-

The requested Undo is not at all simple to do because these are not viewer operations. All inventory changes are transactions that need to be sent to the asset server, and as such unwinding them would require an inverse set of transactions to be performed. In the meantime, the network is carrying other updates that may also change the state of the inventory, potentially clashing with the history that the viewer window is aware of.

There are data processing mechanisms used in real-world systems to give more robust rollback semantics. They typically require either a journaling system (where each change applied is recorded as a distinct operation) or, at its simplest level, a versioned inventory that would invalidate your "undo" history should any external events occur in the intervening period. None of these mechanisms exist today, and they would require explicit implementation on the server side, as the viewer cannot be aware of all the updates.
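As a rough idea of what the simplest "versioned inventory" variant could look like, here is a toy Python sketch. Everything in it is hypothetical (the class, the method names, the undo record layout); nothing like this exists in the viewer or on the server today. Every change bumps a version counter, and an undo is refused if anything else has touched the inventory since the change it wants to reverse.

```python
class VersionedInventory:
    """Toy server-side inventory where every change bumps a version counter."""

    def __init__(self):
        self.version = 0
        self.folder_of = {}  # item id -> folder name

    def move(self, item, folder):
        """Move an item and return a record that can later undo the move."""
        previous = self.folder_of.get(item)
        self.folder_of[item] = folder
        self.version += 1
        # The undo record remembers the version it expects to find.
        return {"item": item, "folder": previous, "expected_version": self.version}

    def undo(self, record):
        """Reverse a move, but only if nothing has changed since it was made."""
        if record["expected_version"] != self.version:
            return False  # something else changed the inventory: history is stale
        if record["folder"] is None:
            del self.folder_of[record["item"]]
        else:
            self.folder_of[record["item"]] = record["folder"]
        self.version += 1
        return True
```

The check is deliberately blunt. Any intervening update, even to an unrelated item, invalidates the undo, which is the trade-off of versioning the whole inventory rather than journaling individual operations.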

I've not thought too long and hard on this, perhaps there is a simple and limited way that simple fat-finger errors can be rolled back and I'm happy to hear thoughts on that, but I for one would be rather wary of heading too far along that path without express Linden Lab support. As always, a feature request Jira on the SL Jira, especially one that fleshes out the desired use case would be the best start.

-


-

On 12/30/2022 at 10:27 PM, ChinRey said:

Also having double sided as a material property makes it more flexible, rather than having the entire mesh double sided, or not

Actually this is not true, though I guess you may be considering what is a "mesh" as different to what I consider a "mesh" to be.

In the Viewer every material face is a separate mesh, thus an object composed of a single item (no links) and 6 materials is 6 separate meshes. By definition, making the material double-sided makes that entire mesh double-sided.

This is in contrast to having individual triangles replicated where necessary by a creator who is doing their job properly.

ETA: The Quote says it was @ChinRey but I think I quoted her quote of @arton Rotaru (sorry both)

-

On 12/31/2022 at 6:22 PM, OptimoMaximo said:

A property like this makes more sense in a shader rather than a shape. The shader can simulate something at render time, which is lighter weight than generating the back faces from a geometry node: the former simulates on screen starting from the actual geometry, the latter should actively duplicate and flip the target geometry.

That is the point: for this to be done on the GPU means that those triangles that would previously have been culled earlier in the pipeline now have to pass all the way through. You cannot simply say "oh, but it's GPU"; that is not how it works.

I don't disagree with Arton's point that there is darkness and light in all. Until I see otherwise, my belief is that this path leads to more shadow than sunshine. In an environment where content quality control is nonexistent and creator skill and conscience are highly variable, the downside to this seems greater than the potential upside. I hope the creator community will prove me wrong.

-

-

2 hours ago, ChinRey said:

Are they seriously going to introduce mandatory backfaces??? If anybody has any influence on the developers at all, stopping this is first priority!

Not mandatory, in fact not even within control of the mesh creator which is why the glTF spec seems to me to have this arse-about-face. Ultimately you get the most efficient solution when the mesh is aware of how it should be rendered, not dictated by the "paint" that you apply to it. However, that is how glTF has expressed it and thus that is where we are heading.

It is clear that the last thing we want is LL creating arbitrary specifications of their own and reinventing wheels that were already there. However, we appear to have taken "a standard for transmitting assets" and munged that with the rendering of them, and it is in that grey area that the demons are lurking.

-

16 hours ago, OptimoMaximo said:

Well, just leave that checkbox out of the permission system and the problem is non existent.

Yes, this has been requested, it is entirely out of the hands of the viewer devs though.

-

1 hour ago, OptimoMaximo said:

This comes from the assumption that such materials are to be used for foliage with translucency. The same happens within UnrealEngine as well as Unity or Godot. I'm not sure, but it should have to do with how translucency changes its method to calculate transitioning light when double sided is set to true.

Yes, an "assumption" of usage that has become enshrined in a "standard" for transporting material information and which is entirely detached from the application of the material. In a more usual scenario, the creator is responsible for and accountable for the efficiency of their content. In SL, sadly, there is absolutely no accountability for shoddy, laggy creations, and we also have the situation where we are creating a secondary market for materials where the end use cannot be guessed by the creator and unless the platform enforces modify capability on materials we will end up with double sided materials being the norm "just in case".

No doubt it will be the fault of the viewer that everything grinds to a halt again.

-

On 12/27/2022 at 7:00 PM, Katherine Heartsong said:

One other suggestion/question ... why do you have HUDs open on your screen for anything?

Open them, use them, then detach/close them. I never have any HUDs open on screen, they add to my avatars weight, sometimes to the tune of 10-20K.

Removing HUDs is good advice. So many people run around with the worst of all HUDs, their head and body alpha HUDs, constantly consuming space. If you really want quick access to those, use the "favourite wearables" shortcut to have them no more than two clicks away. Alternatively, use alt-shift-h to simply not render HUDs.

I don't agree with the "avatar weight" nonsense as a reliable guide to anything, never have done, unlikely I ever will; however HUDs are a demonstrable consumer of render time and will affect your FPS especially in texture heavy areas where the always texture hungry HUD has to compete with the scene for VRAM and other resources.

-


-

Double-faces are a double-edged sword, be careful what you wish for.

In theory, the way that it works today, the creator doubles up the mesh faces to create an inside/lining. The viewer can (in theory) eliminate these when they are not visible by backface culling. This also means that, as in the real world, we can have a different appearance on the inside than on the outside, as the mesh is separate.

With the (apparently) much wanted double-faced solution in PBR we have (as I understand it at present) the worst of all worlds. The unseen faces cannot be culled because they are double-faced; thus we have increased overdraw and wasted effort. The PBR solution is wed to the rather braindead glTF standard view that the "faced-ness" of an object is an attribute of the material and not of the mesh itself. Thus if I am making a material for "tarnished copper" and I decide that it should be double-sided, in case someone wants to make (for example) a copper bucket that is a single mesh skin, then every time I make a tarnished copper object with that material it will (by default) have a backface, even if that face is entirely occluded. End-users will be buying materials and applying them unaware of this. The "solution" is to ensure that the "double-sided" attribute is always no-mod so that wise creators can use it appropriately. However, what we are really doing is making it easier to make poorly performing content and removing options to optimise this in the viewer through automation.

I fear that we are about to make content even less efficient to render. Ho hum

-


-

Hi all

Very quick reply as it is stupidly late/early and anything I write will probably be turned to gibberish by my fingers.

1) Physics crashing on SL (and FS). I have recently fixed this bug. It turns out that the code in the uploader has always assumed that the physics shape has weights on it (even though that makes no sense at all in SL), it does not check for this and as a result if you pick the cube shape or the "bounding box" option in the LL viewer, then the viewer will explode spectacularly when you calculate the costs. This is fixed in the latest FS preview (join the FS preview group to get access) and should be released soon-ish.

2) Rigging of LODs. I'm not entirely sure what we are aiming for here. The first thing I will note is that, from the images above, we can see that the joint offsets were not being displayed in the preview for some of the LOD images. That could well just be a mistake while documenting the issue, however. As for rigging of LODs, I can't recall the exact nature right now; I'll review it when the Christmas visitation of relatives ceases and normality returns. IIRC (and I may well not do so) we have just one set of weights that we abide by, and these are loaded with the high LOD; all lower LOD models are assigned weights using an algorithm that finds the nearest vertex in the lower LOD models. I am casting doubt on my own recollections here, though, as I cannot say with any certainty whether it does this for custom LODs that have weights with them or only for generated LODs. Given the ridiculous bug-ridden state of LOD switching with rigged mesh, most people only ever use auto LODs because the other LODs are highly unlikely to be seen. I applaud anyone trying to do the job properly, but it would not surprise me to find that things are not quite as you'd like/expect but that nobody has noticed!
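To show the kind of nearest-vertex weight transfer recalled in point 2, here is a minimal Python sketch. Treat it as one reading of that recollection rather than the uploader's actual algorithm: it assumes each lower LOD vertex simply copies the weights of the closest high LOD vertex, and the function name and joint names are illustrative only.

```python
import math


def transfer_weights(high_lod, low_lod_positions):
    """Give each low-LOD vertex the weights of the nearest high-LOD vertex.

    high_lod is a list of (position, weights) pairs, positions are (x, y, z)
    tuples and weights map a joint name to its influence.
    """
    result = []
    for pos in low_lod_positions:
        nearest = min(high_lod, key=lambda hv: math.dist(hv[0], pos))
        result.append(dict(nearest[1]))  # copy so the LODs do not share a dict
    return result
```

A brute-force search like this is quadratic in the vertex counts, which is fine for a sketch; a real implementation would more likely use a spatial index.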

-


-

On 12/23/2022 at 6:00 PM, ChinRey said:

Somebody on the Firestorm dev team has a weird sense of humour.

/me takes a bow and grins.

I chose that name, not as a cruel joke, but for a lack of better words to describe it. The reason I settled on reliable is because (whether you like the results or not - I am certainly not suggesting they are good) GLOD reliably produces something that is close to what you told it to aim for, and is what you have used in the past. It will reliably adhere to your request to create very low poly (awful looking) LODs. MeshOptimiser on the other hand is bloody awful in about 2/3 of all cases that I tested, often resulting in excruciatingly bad LODs that cost the unobservant creator vast amounts of Lindens for unusable uploads. It almost completely ignores the target numbers, taking them as a guide rather than a limit. GLOD is also (typically) far more reliable when it comes to retaining UVs, whereas both auto (which is really just a wrapper around precise and sloppy) and sloppy pretty much destroy your UVs.

I am happy to explore other options that people feel are worth having. They need to be freely distributable of course, and be able to be used from within the viewer.

MeshOptimiser is not a bad library; the way that the uploader is set up to use it, though, is far from ideal, to the point where I requested it not be released with the performance viewer update, but kept separate so that people could focus on it as a beta and perhaps nudge it in the right direction. I did not feel it was ready, which is also reflected in my decision to reinstate the GLOD library.

-


-

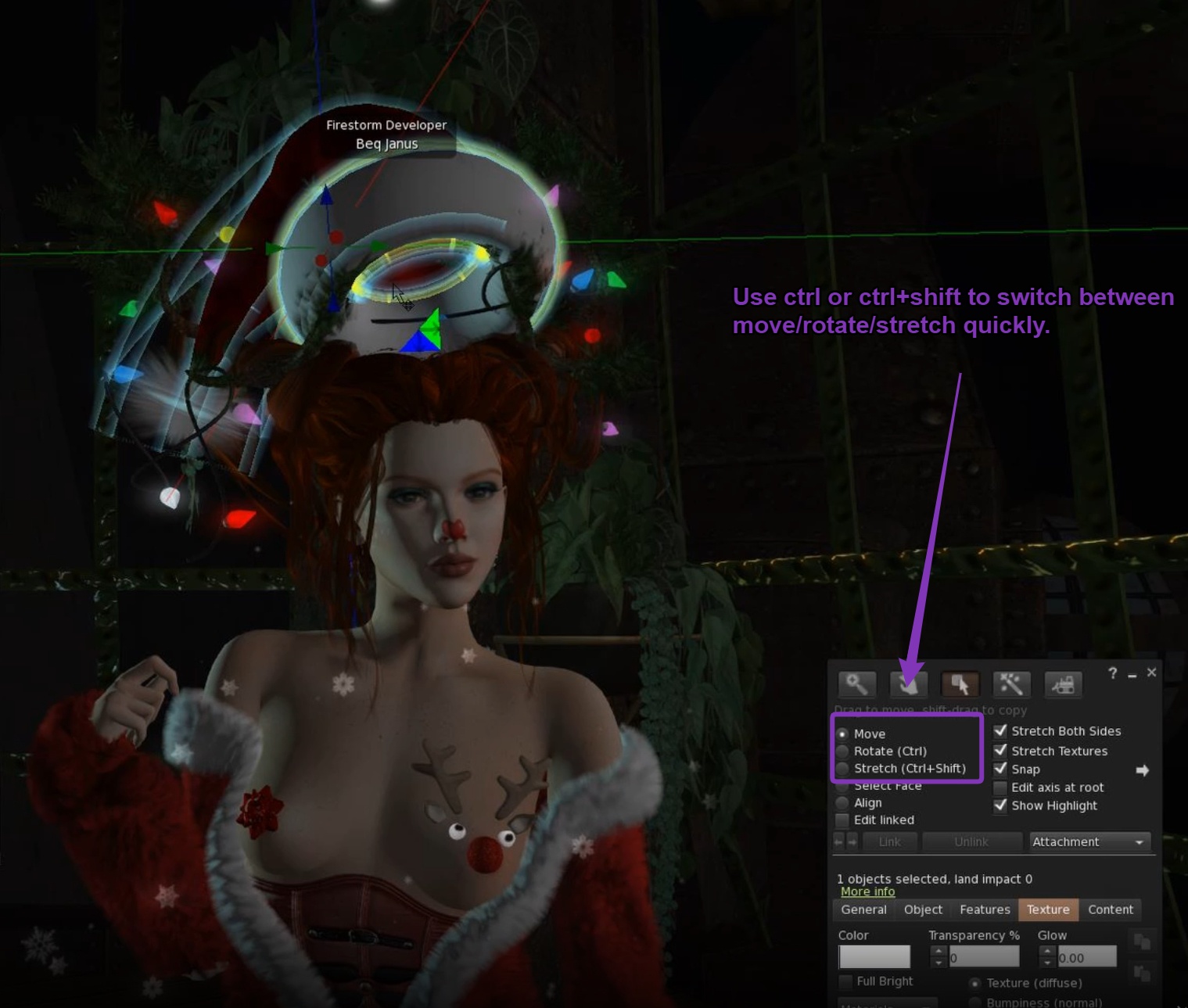

Unrigged hat

in Mesh

As @Wulfie Reanimator says, attach it to your skull, chin or nose: somewhere near where you want it and, importantly, a place that moves with your head. Being unrigged, the hat will not follow movement in the same way as a rigged mesh one would. The hat may not be correctly positioned. Right click on the hat and select Edit. You will see arrows that will allow you to move it to where you would like it to be.

This movie clip shows me editing a random old prim hat. https://i.gyazo.com/1fea6bf74e50cc9675df5904bd36ff8b.mp4

By default the arrows will move the item; if you hold the control key down, you will see rings appear, allowing you to rotate it.

You may also need to resize it: hold down ctrl and shift and you'll see white blocks on the corners; dragging these will stretch and shrink the item.

-


-

6 hours ago, Rick Daylight said:

I've just been trying to reproduce similar things in the official viewer... I can't. At least not in half an hour, yet in FS it's instant. In fact in the official viewer, spinning around in the middle of my lawn as quickly as I can (after waiting a good while facing one way) shows no delay at all in drawing what was behind me. It's the 'seamless' that I said it should be in my OP. Unlike when I do that in Firestorm and the ground cover plants take a second to show up as I cam around to them.

Sorry, I should have caught this in my last reply. This is interesting: given that the LOD behaviour seems to be part of this, the LL viewer should be decaying sooner. If this is an "instant" thing in FS, please reach out to me with an LM so that I can try. Due to RL I won't realistically get to do more than just look at this until next Wednesday, but having somewhere it is likely to reproduce is worth seizing.

-


-

18 hours ago, Rick Daylight said:

I'm sure there must be Jiras covering these things already, or I would file some. I know I'm not the only one.

There are some "related" Jiras. This has certainly become more common since the performance improvements, but I've tried to find similar issues from earlier releases too. One of the problems is that there are many different reasons that an object might not show up, and reproducing these bugs so that we can fix them is really the key. The more reports I have to compare and contrast, the more it helps. I know that people get frustrated being asked to log Jiras (and I don't mean you here Rick, I mean in general) but I cannot overstate how useful it is to have a good solid report of a bug, especially if there is a way to reproduce it that we can try.

A quick skim of Jira found https://jira.firestormviewer.org/browse/FIRE-32302 and https://jira.firestormviewer.org/browse/FIRE-32220

I've tried to reproduce these by driving around the tracks but either there is something about my system that refuses to repro, or (more likely) I am just too rubbish at driving to get a smooth enough lap 🙂

We also have (linked to those) https://jira.firestormviewer.org/browse/FIRE-32382

I doubt these are specific to Firestorm (though I cannot rule it out); it is more likely the result of the recent upstream changes, but until we can reliably reproduce it and then test it across viewers it will just sit with us and we will deal with it when we can.

@Whirly Fizzle, are you aware of other Jiras that Rick might want to look at to see if they relate to his issue? He can of course create another, but collecting things together under one report is always nice. I worry that, with the Lab busily trying to get PBR released while we are still firefighting a raft of bugs introduced by the last major update, we're going to take a lot of the shine off the excellent improvements through the loss of reliability.

@Rick Daylight you say that you "cap at 50"; can you confirm that this cap is not achieved by using VSync, please? VSync should be avoided in almost all cases. If you want to limit frame rate then use the viewer's own fps limit, which is far more reliable. (I also intend to take a look at @Henri Beauchamp's fps limit when time allows, as that is a little more responsive because it manages the perceived frame rate separately from the background services, but in any case the built-in viewer limit will give you a far better result than VSync.) VSync sucks because you do not get sync'd to the refresh rate but to some factor of the refresh rate. If you are hoping for 50fps, then the screen needs to draw every 20ms; with lame old-school VSync, if a frame takes 21ms then you have missed the vsync event and it will pause until the next one, dropping you to 25fps, causing input jitter and all kinds of other annoyances. VSync - Just say no.
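The arithmetic behind that 50fps to 25fps drop can be written out as a small Python sketch. It assumes a 50Hz display and the simplest model of VSync, where a finished frame always waits for the next refresh; `vsync_fps` is a name made up for the example.

```python
import math


def vsync_fps(frame_ms, refresh_hz):
    """Effective frame rate when every frame waits for the next vsync."""
    interval_ms = 1000.0 / refresh_hz
    # A frame that misses a refresh has to wait for the following one.
    intervals = max(1, math.ceil(frame_ms / interval_ms))
    return refresh_hz / intervals
```

Under those assumptions a 19ms frame fits inside one 20ms refresh and is shown at 50fps, while a 21ms frame spills into a second refresh and is shown at 25fps: a 2ms difference in render time halves the frame rate.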

-

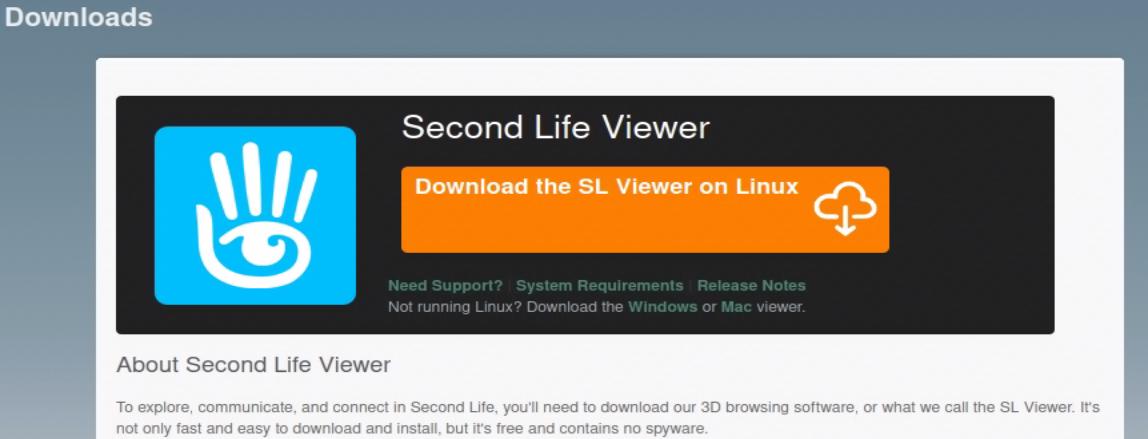

I would be more strident on this. That version of Second Life viewer should not be run, at all, ever. It is sad that it remains listed and available for download on the Linden Lab website as it gives a very poor impression of Second Life at a time when more Linux users are potentially appearing (I see a small but growing number running Firestorm on Steam Decks). I would consider it an unsafe download given the ancient SSL and CEF libraries, not to mention the fact that it lacks support for a number of core features that are central to a modern Second Life experience.

Just for those of you who think that Linux is no longer listed on the downloads page...that's because you are not running Linux. This is what you would see if you visited Secondlife.com on a SteamDeck or any other Linux-based device, looking to join up.

It would be worth shouting out @Vir Linden, Signal Linden and @Monty Linden and @Alexa Linden. While I accept that the effort to restore upstream (i.e. Linden Lab) support for Linux would probably not see a clear commercial return, (I think that there are indirect returns on the investment, through automation potential, etc.); it would be cheap (practically free really) to at the very least have the downloads page changed to reflect the realistic case that while LL do not directly support Linux, it is a platform that they recognise as being used by their customers and affirming that Linux users are fully supported by a number of actively developed TPVs such as Firestorm, CoolVL, Alchemy etc. The present state simply misleads these users into downloading a decrepit ancient viewer that then breaks and actively discourages them. Another lost customer that did not need to be lost.

-


-

Thanks @arton Rotaru

Put simply the whole thing is a bit of a lottery. It has been interesting over the last month or so. I came back from having been waylaid by RL and the need to earn money 🙂 over the summer to find that there were a number of different alpha rendering issues that emerged after the performance updates. I fixed some in FS and the Lab fixed the same ones and more in their Maint-N update, this in turn broke other things. So I've spent a while dancing circles around this stuff and playing bug whack-a-mole because the "correct behaviour" has never been defined; thus people find a thing that they think is a cool hack to make sure that their pet creation renders as they want, but then we innocently change some code and the house of cards comes crashing down.

There are a range of hacks such as the use of materials (blank or otherwise) to change the apparent render priority, the use of full bright for the same, even the use of alpha mask cutoff, when alpha blend is in use, all being treated as cast-iron rules, when in reality they are pure happenstance, a side-effect of the order in which the code runs. This "order" may be arbitrary and thus can change because we alter the way we store something, or just change the algorithm to make things faster, who knows simply changing the compiler could affect some of these nuances.

The attachment point agreement is step one of the route out of the dark ages. I hope by the end of the weekend to have a concrete solution as a proof of concept that will go a lot further and I will seek support from the Lab to make that a documented and stable feature for the foreseeable future. By this I mean that if your content follows the rules and breaks then we will fix the viewer, if you don't follow the rules then you'll need to fix the content. Moreover, if and when the day comes that rendering upgrades and tech changes mean that some part of the rules can no longer be supported, we viewer devs (or more correctly for such changes, LL) will at least be able to broadcast this fact and make a breaking change in the full knowledge of what it is breaking.

As with the attachment points, the plan is to get a working proof of concept, test it out and work out what the impact is on current content and then ask LL to adopt it; "Beq says so" should never be acceptable (or trusted)🙂 but "LL says so" is something you should be able to hang your hat on.

TL;DR at the moment, the ordering within a linkset and within the faces of a mesh/prim is not fully guaranteed, and even where an ordering exists or can be deduced, it is not underwritten to stay that way. I hope to get that resolved soon(tm)

-


-

On 11/24/2022 at 6:52 PM, animats said:

Oh, how is Shadow PC working out for you? Tell us more. If that's the only problem you have, their remote desktop thing is working well.

This is one way to run SL on anything that can run Youtube and Netflix. It's not cheap, but it's a solution.

I'd like to see SL supported on NVidia GEForce Now, which does roughly the same thing, but has lower prices.

I bought into Google Stadia as a founder in the hope that it would be open enough to allow us to explore FS on there. Sadly it was not made available in a way that I could justify trying to support it. A lot of people found the Old SL-Go useful back in the days before Sony bought it and killed the service. With Stadia now closing I am looking at GeForce Now as an alternative. It is not high on my list but definitely of interest.

-


-

36 minutes ago, Jackson Redstar said:

This though now seems to be cleared up.... What I noticed, as I do weddings, is that often when moving the cam to where a lot of people are, the framerate drops like a rock (to be expected of course) but then struggles or never really recovers when moving back to a less crowded view. That and the ol nakey avi never rezzing fully seemed to be back again

I would double check for inventory corruption. There's another thread here discussing this issue and it is plausible that some of what you are seeing could be the result of a previous bad shutdown. Hopefully we'll have a new set of betas real soon.

-


-

Just returning here to update where we are.

I have recently pushed a change to Firestorm that resolves the most pressing issue, which is the inventory writing and shutdown. To my mind it is not really the best solution, but the correct option is quite a lot more complicated and I'll review that in due course, perhaps with a view to doing something in FS or working with LL to get a more maintainable shutdown sequence.

For now at least there will be a slightly longer pause at exit: the main window will not close until the inventory file has been written and zipped. This should resolve the immediate issue. Please note that if you have had any form of inventory loss in recent releases then I would recommend a one-time cache clear once you get the next release, to be sure that everything has been pulled in.

I'll put more detail into the Jira.

I am not a Windows expert by any means, but from this investigation it seems that the reason that Windows will allow the OS to shut down while the viewer is still running is that the main window has been closed. As far as I have been able to establish, Windows will give you the "the following processes are preventing shutdown" prompt ONLY if there is an active window. It does not, at least by default, block if a non-windowed process is active. This would explain the situation that we have seen.

Why is there no window? Because the main window servicing was moved to a dedicated thread, and that thread is allowed to terminate almost immediately upon receipt of the quit request.

As @NiranV Dean noted earlier, there has always been, and arguably still is, a prospect of inventory corruption upon rapid restart. The fix I have committed makes this far less likely to occur in the OS shutdown scenario, and also reduces the likelihood in the rapid restart case so long as the user waits for the previous instance to close the window. There is undoubtedly still a race condition if you start one instance up while shutting down another.

-


-

19 hours ago, Beq Janus said:

There is nothing that I can see that suggests that is how it is meant to behave at all. As I said in the response above, all of the cache writing should be happening on the main thread and there is no parallel activity that should cause early termination

Ironically, I think that @Jaylinbridges you were more correct than I initially thought, although I am still digging. The only way I can repro is to do the aggressive reboot, and that would appear to prove the "viewer carries on after shutdown" conclusion that a number of you had come to. There have been a lot of changes to the threading in recent times and in particular a lot more stuff has been moved into the threadpools. When closing the viewer you need to make sure that all the threads are terminated before you release shared resources. These are therefore triggered upon the close signal. This is what seems to be closing down the visible traces of the viewer, leaving the main thread still running. On my own system I struggled to get this to repeat, so I added a slowdown into the cache writer and it is more obvious now.

It is worth noting that it is not just the inventory cache being corrupted. There are a number of other more minor things that are persisted after the cache and these will not happen when the rapid OS shutdown occurs either. Their effects are more subtle.

There are a number of things that need to happen now and I am exploring them.

1) The sequence of shutdown needs to be adjusted so that we have some visible indication that the viewer is running.

2) Understand why the OS is not flagging the process as busy when shutting down.

3) Make the writing and recovery more robust.
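On point 3, one common way to make a cache write survive being killed part-way through is to write to a temporary file and only swap it into place once it is complete. The Python sketch below shows that general pattern; it is not what the viewer does, and the function names and the one-item-per-line format are assumptions made for the example.

```python
import gzip
import os
import tempfile
import zlib


def write_cache_atomically(path, lines):
    """Write a gzipped cache so a reader never sees a half-written file."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    os.close(fd)
    try:
        with gzip.open(tmp_path, "wt", encoding="utf-8") as out:
            for line in lines:
                out.write(line + "\n")
        os.replace(tmp_path, path)  # swap in the complete file in one step
    finally:
        # Only true if the write failed before the swap happened.
        if os.path.exists(tmp_path):
            os.remove(tmp_path)


def read_cache(path):
    """Return the cached lines, or None if the file is missing or damaged."""
    try:
        with gzip.open(path, "rt", encoding="utf-8") as src:
            return src.read().splitlines()
    except (OSError, EOFError, zlib.error):
        return None
```

If the process is killed during the write, the previous cache file is still intact and only a stray `.tmp` file is left behind. On the reading side, a truncated or damaged archive is reported as "no cache" rather than being loaded as a shorter inventory.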

I am sure the lab will be looking into this too now that we have proven the reproducibility on the parent viewer.

-


-

10 hours ago, Jaylinbridges said:

I hope debugging files gives you an answer. Thanks for looking at the logout processes. But from another simple view, why does the LL and FS viewers shutdown instantly and return to the Windows desktop? We know the viewer is not really finished in less than 1 second after you hit that X to log out. What about the code that removed the prior shutdown window?

And why was it removed after years of having a proper shutdown window?

There is nothing that I can see that suggests that is how it is meant to behave at all. As I said in the response above, all of the cache writing should be happening on the main thread and there is no parallel activity that should cause early termination. It is the case that a lot of non-critical (where critical means rendering and main game loop) activities have been moved to separate threads as part of the performance changes, so it could be that something is tripping over itself; hence my belief that somewhere something is causing the viewer to crash and burn without any trace. But as I said, this is mostly speculation right now.

-


Firestorm perfomance issues observations

in Second Life Viewer


Hi @Jackson Redstar,

Assuming that you have asked in the proper places (Firestorm Support group) and not progressed this, can you put this into a JIRA please. I can't manage issues that appear in the forums in any sensible manner.

It is worth noting that from the initial performance update to today there has only been one viewer change: the release of 6.6.8 (which is mostly a maintenance update in terms of rendering). You have not noted the viewer version; I'll assume 6.6.8 for now. You noted in other posts (prior to the latest release) that performance has changed for the worse; those would have been on 6.6.5 and presumably with the same viewer code. This would suggest something has changed outside of the viewer code itself. This could be many things, of course: Windows updates, network degradation (typically easily solved/dismissed by a router reboot) and AV changes being the most common external factors. Then of course we have the weird and wonderful world of viewer settings, caches and so forth.

I am sure you have checked the obvious, normal first step for all "slow" and "non-rezzing" issues, which is that your whitelisting exclusions are still being properly applied; this is not your first rodeo. However, as this accounts for well over 90% of the performance issues we troubleshoot, it is always worth double checking and even briefly disabling all your AV and malware protection just to exclude those from the equation.